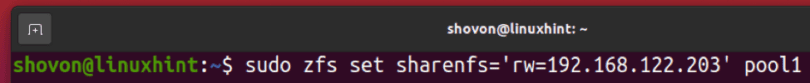

With this in place, the /etc/dfs/sharetab file remains consistent with the output of `exportfs` and with the number of shared filesystems that are created, but I've seen cases where `zfs set sharenfs=off` or `zfs destroy` fails. So far, I'm not sure whether these failures are a result of our work-in-progress change that I linked to or of a tangential problem in the code.
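
To check that consistency mechanically rather than by eye, a minimal Python sketch could diff the paths listed in /etc/dfs/sharetab against a list of exported paths. It assumes the shared path is the first whitespace-separated field on each sharetab line (as in the illumos sharetab format), so paths containing whitespace are not handled, and that the exported paths have already been collected, e.g. by hand from `exportfs` output. `sharetab_paths`, `compare_shares` and `/tank/data` are hypothetical names.

```python
from typing import Iterable, Set, Tuple


def sharetab_paths(sharetab_text: str) -> Set[str]:
    """Return the shared paths listed in sharetab-formatted text.

    Assumption: the shared path is the first whitespace-separated field of
    each non-empty line, so paths containing whitespace are not handled.
    """
    paths: Set[str] = set()
    for line in sharetab_text.splitlines():
        fields = line.split()
        if fields:
            paths.add(fields[0])
    return paths


def compare_shares(sharetab_text: str, exported: Iterable[str]) -> Tuple[Set[str], Set[str]]:
    """Return (paths only in sharetab, paths only in the exported list)."""
    in_tab = sharetab_paths(sharetab_text)
    in_exports = set(exported)
    return in_tab - in_exports, in_exports - in_tab


if __name__ == "__main__":
    # Hypothetical usage: the exported paths are typed in by hand here.
    with open("/etc/dfs/sharetab") as f:
        only_tab, only_exports = compare_shares(f.read(), ["/tank/data"])
    print("only in sharetab:", sorted(only_tab))
    print("only in exports:", sorted(only_exports))
```

Two empty sets mean the two views agree; anything left over points at a share that one side knows about and the other does not.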

Unmounting and remounting the NFS share on the client side brings the situation back to normal.

If I try to access the same file from the other application server, which mounts the same NFS share, I get the correct file. Moving down through the various layers, I did the following:

- checked the SHA256 of the file on both GlusterFS nodes: the hash is correct;
- checked the SHA256 of the file on the ZFS dataset mountpoint: the hash is correct.
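
The layer-by-layer check above can be scripted so that the same file is hashed through each path in one go. This is a minimal Python sketch using only `hashlib`; the `label=path` command-line convention and the example paths are hypothetical, and the script has to run on a host that can actually see each path (an NFS client, a GlusterFS node, the ZFS host).

```python
import hashlib
import sys
from typing import Dict


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files are not loaded into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_layers(paths: Dict[str, str]) -> Dict[str, str]:
    """Map each layer label to the SHA256 of the file as seen through that layer."""
    return {label: sha256_of(p) for label, p in paths.items()}


if __name__ == "__main__":
    # Hypothetical usage: python layers.py nfs=/mnt/nfs/f zfs=/tank/f
    layers = dict(arg.split("=", 1) for arg in sys.argv[1:])
    hashes = compare_layers(layers)
    for label, h in hashes.items():
        print(f"{label}: {h}")
    print("consistent" if len(set(hashes.values())) <= 1 else "MISMATCH")
```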


By "wrong" I mean a complete and intact file (no bitrot or other corruption), e.g. an intact XML file instead of a PDF. Apart from the filename, the other metadata (file size, file creation date, etc.) is correct and reflects the real (wrong) file.
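
Since the wrong file is intact but of a different type, a quick content sniff can flag the symptom even without the stored hash. A minimal Python sketch, assuming only two signatures matter here: PDF files start with `%PDF-`, and XML files usually start with an `<?xml` declaration, optionally preceded by a UTF-8 byte-order mark. XML without a declaration would come back as "unknown"; `sniff_type` and `sniff_file` are hypothetical names.

```python
def sniff_type(header: bytes) -> str:
    """Guess "pdf", "xml" or "unknown" from the first bytes of a file."""
    if header.startswith(b"%PDF-"):
        return "pdf"
    # Drop a UTF-8 byte-order mark, then tolerate leading whitespace.
    if header.startswith(b"\xef\xbb\xbf"):
        header = header[3:]
    if header.lstrip().startswith(b"<?xml"):
        return "xml"
    return "unknown"


def sniff_file(path: str) -> str:
    """Read only the first few bytes of the file and sniff them."""
    with open(path, "rb") as f:
        return sniff_type(f.read(16))
```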

When this happens (luckily only a couple of times so far), I can log on to the application server and copy the (wrong) file from the (right) path to local storage for further analysis, and the file content is still wrong.
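
With a local copy of the wrong file in hand, one way to find out which archive file was actually served is to search the archive for identical content. A minimal Python sketch: because the file size matches the wrong file, it compares sizes first with a cheap `stat` and hashes only same-size candidates. Running it against the ZFS mountpoint or a brick rather than through NFS is arguably safer, since NFS is the suspect layer. `find_source` and its arguments are hypothetical.

```python
import hashlib
import os
from typing import Iterator


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files are not loaded into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_source(wrong_copy: str, archive_root: str) -> Iterator[str]:
    """Yield archive files whose content is identical to wrong_copy."""
    target_size = os.path.getsize(wrong_copy)
    target_hash = sha256_of(wrong_copy)
    for dirpath, _dirnames, filenames in os.walk(archive_root):
        for name in filenames:
            candidate = os.path.join(dirpath, name)
            try:
                # Cheap size check first; only same-size files get hashed.
                if os.path.getsize(candidate) != target_size:
                    continue
                if sha256_of(candidate) == target_hash:
                    yield candidate
            except OSError:
                # File vanished or is unreadable; skip it.
                continue
```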


The workload is almost write once/read sometimes: files are written to storage and almost never modified, and sometimes they are read to serve a request. Before returning the data to the requesting client, the application calculates the file's SHA256 hash and compares it with the one stored in its database. Even though the application uses the full path to the file, sometimes one node gets completely different (and wrong) file contents, e.g. the application asks for `/data/storage_archive/20220802/filename` and gets the data of `/data/storage_archive/RANDOM_DATE_HERE/RANDOM_FILENAME`.
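
The application's own code isn't shown, but the verify-before-serve step can be sketched roughly as follows. This is a minimal Python sketch assuming the whole file fits in memory and the stored hash is a hex string; `read_verified` and `ChecksumMismatch` are hypothetical names.

```python
import hashlib


class ChecksumMismatch(Exception):
    """Raised when file content does not match the hash recorded for its path."""


def read_verified(path: str, expected_sha256: str) -> bytes:
    """Read a file once and return its bytes only if their SHA256 matches."""
    with open(path, "rb") as f:
        data = f.read()
    actual = hashlib.sha256(data).hexdigest()
    if actual != expected_sha256.lower():
        raise ChecksumMismatch(f"{path}: expected {expected_sha256}, got {actual}")
    return data
```

Hashing the same bytes that are returned, rather than reading the file a second time to serve it, means the check covers exactly what the client receives, even if a second read through NFS were to return different content.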

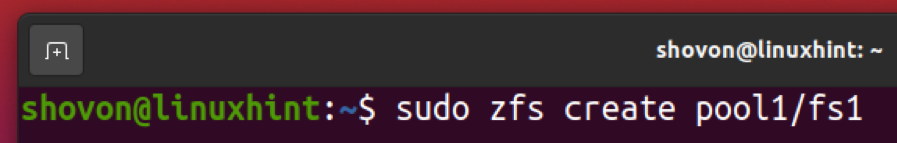

I set up an NFS server on top of a replicated GlusterFS volume, which in turn sits on top of a mirrored ZFS pool, to serve files to a couple of application servers. There are two application servers, both retrieving files stored on the NFS share.
